Publications

-

Noise Correlations Induce Drift in EI Networks with Plasticity

Michelle Miller, Christoph Miehl, Brent Doiron

Paper in Progress

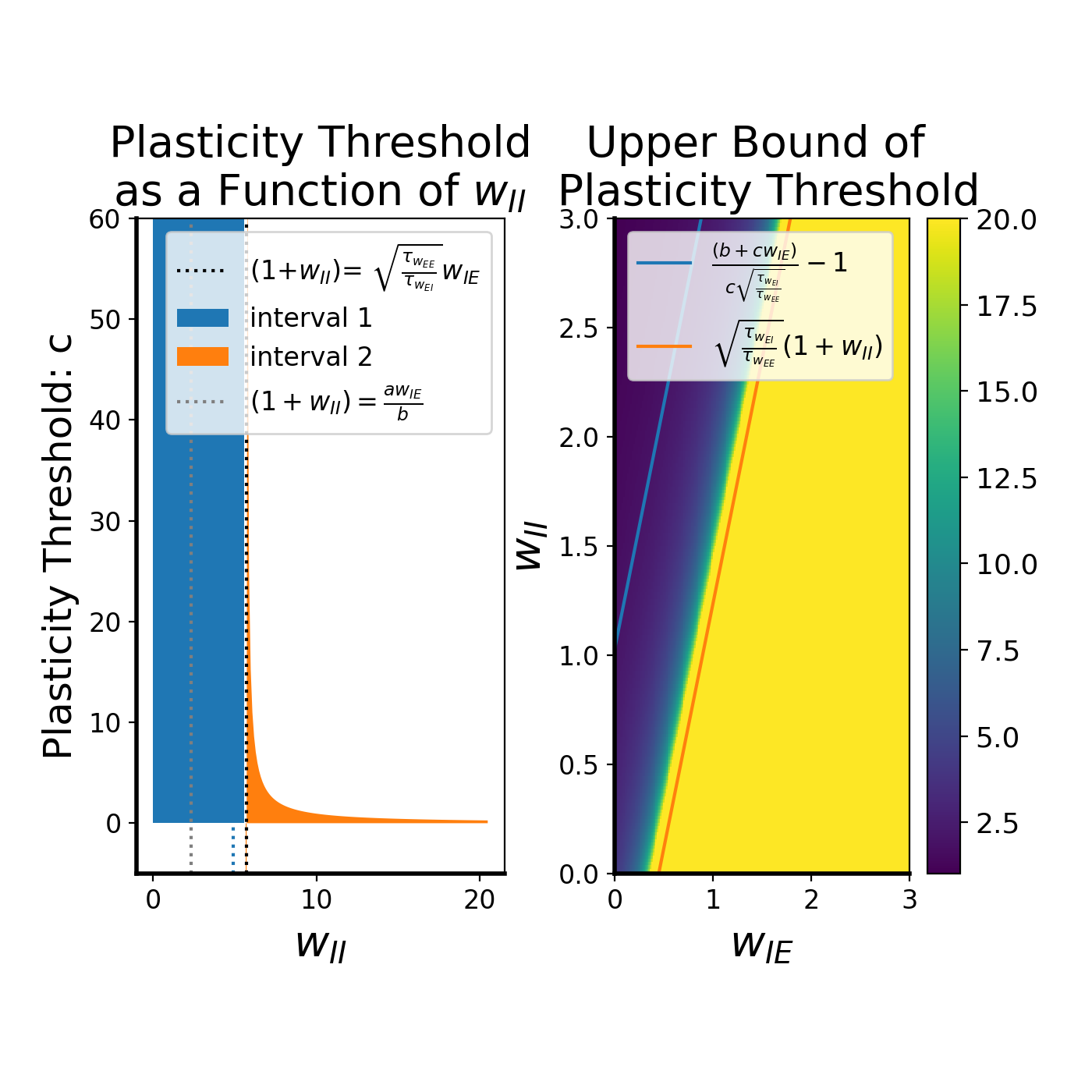

We investigated the dynamics of a two-population recurrent rate network with excitatory (E) and inhibitory (I) plasticity. Whereas previous analyses have focused on feedforward-coupled models with excitatory-to-excitatory (EE) and inhibitory-to-excitatory (EI) plasticity, we consider the learning dynamics in a fully recurrent network. Assuming that plasticity is slow relative to the firing-rate dynamics, we imposed conditions that the E-to-E and I-to-E weights must satisfy to produce stable firing rates. This allowed us to calculate stability conditions for the weight dynamics under these constraints, which yield an upper bound on the plasticity threshold in both models. We further find a regime in parameter space in which the plasticity threshold is unbounded and a stable line attractor appears in weight space. We also found that incorporating noise into the rate model, and into a spiking model, induces drift in the learned weights. This drift is mitigated when noise correlations are shared between the E and I populations, suggesting a simple potential mechanism for limiting drift in learned synaptic weights. Taken together, we show analytically how rate and weight stability in a recurrent network of excitatory and inhibitory neurons depend on the model parameters.
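
As a rough illustration of what "stable firing rates" means for a two-population network, the sketch below treats the weights as frozen (the fast-rate limit implied by slow plasticity) and checks linear stability of the rate fixed point. It assumes a linear rate model, tau_E dr_E/dt = -r_E + w_EE r_E - w_EI r_I + I_E and tau_I dr_I/dt = -r_I + w_IE r_E - w_II r_I + I_I, where `w_ei` is the I-to-E weight. This model form and all parameter names are assumptions for illustration, not the model from the paper. For a 2x2 Jacobian, both eigenvalues have negative real parts exactly when the trace is negative and the determinant is positive.

```python
from typing import Tuple

Matrix2 = Tuple[Tuple[float, float], Tuple[float, float]]

def jacobian(w_ee: float, w_ei: float, w_ie: float, w_ii: float,
             tau_e: float = 1.0, tau_i: float = 1.0) -> Matrix2:
    """Jacobian of the assumed linear E-I rate model with frozen weights.

    Weights are magnitudes; inhibitory weights enter with a minus sign.
    """
    return (
        ((-1.0 + w_ee) / tau_e, -w_ei / tau_e),
        (w_ie / tau_i, (-1.0 - w_ii) / tau_i),
    )

def rates_stable(w_ee: float, w_ei: float, w_ie: float, w_ii: float,
                 tau_e: float = 1.0, tau_i: float = 1.0) -> bool:
    """True if the rate fixed point is linearly stable (trace < 0 and det > 0)."""
    (a, b), (c, d) = jacobian(w_ee, w_ei, w_ie, w_ii, tau_e, tau_i)
    trace = a + d
    det = a * d - b * c
    return trace < 0 and det > 0

# Moderate recurrent excitation: trace = -2.0, det = 1.75, so stable.
print(rates_stable(0.5, 1.0, 1.0, 0.5))  # True
# Strong recurrent excitation: trace = 0.5 > 0, so unstable.
print(rates_stable(3.0, 1.0, 1.0, 0.5))  # False
```

Under slow plasticity, conditions of this kind become constraints that the slowly evolving E-to-E and I-to-E weights must keep satisfying as they learn.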

-

Fading Ensembles Support Memory Updating During Task Updating

(2024; Poster at Society for Neuroscience Meeting 2023; Paper in Progress)

Authors: Michelle Miller**, Austin M. Baggetta**, Zhe Dong, Brian M. Sweis, Denisse Morales-Rodriguez, Zachary T. Pennington, Taylor Francisco, David J. Freedman, Mark G. Baxter, Tristan Shuman, Denise J. Cai

** Indicates equal contribution

Memory updating is critical in dynamic environments because updating memories with new information promotes versatility. However, little is known about how memories are updated with new information. To study how neuronal ensembles might support memory updating, we used a hippocampus-dependent memory-updating task to measure hippocampal ensemble dynamics when mice switched navigational goals. Using Miniscope calcium imaging, we identified neuronal ensembles (groups of co-active neurons) in dorsal CA1 that were spatially tuned and stable across training sessions. When reward locations were moved during an updating test, a subset of these ensembles decreased their activation strength, and this decrease correlated with memory updating. These “fading” ensembles resulted from weakly connected neurons becoming less co-active with their peers. Middle-aged mice were impaired in the updating task, and the prevalence of their remodeling ensembles correlated with their memory-updating performance. Together, these results identify a mechanism by which the hippocampus breaks down ensembles to support memory updating. To test this mechanism, we also developed a neural network with one hidden layer, trained with a reinforcement learning paradigm that mirrors the task updating in the experiment. The model recapitulates the animals' behavior: its hit rate, correct rejection rate, and discriminability index rise to a peak across sessions, drop upon task switching, and then begin to improve again. The network also over-represents the reward ports. Furthermore, upon reversal, updated-task performance is negatively correlated with the number of units participating in stable ensembles.
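
The discriminability index is commonly computed as the signal-detection d' = Z(hit rate) - Z(false-alarm rate), where Z is the inverse standard normal CDF and the false-alarm rate equals 1 - correct rejection rate. The sketch below assumes this standard definition, since the abstract does not state the exact formula used. It clips rates away from 0 and 1 so that Z stays finite; the `eps` value is an arbitrary illustrative choice.

```python
from statistics import NormalDist

def d_prime(hit_rate: float, correct_rejection_rate: float, eps: float = 1e-3) -> float:
    """Signal-detection discriminability d' from hit and correct-rejection rates.

    False-alarm rate = 1 - correct rejection rate. Rates are clipped to
    [eps, 1 - eps] because the inverse normal CDF diverges at 0 and 1.
    """
    def clip(p: float) -> float:
        return min(max(p, eps), 1.0 - eps)

    z = NormalDist().inv_cdf  # inverse CDF of the standard normal
    false_alarm_rate = 1.0 - correct_rejection_rate
    return z(clip(hit_rate)) - z(clip(false_alarm_rate))

# Chance performance gives d' = 0; better discrimination gives larger d'.
print(d_prime(0.5, 0.5))            # 0.0
print(round(d_prime(0.9, 0.9), 3))  # 2.563
```

Because correct rejections enter through the false-alarm rate, a drop in either hit rate or correct rejection rate after a task switch lowers d'.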

-

Artificial Neuronal Ensembles with Learned Context Dependent Gating

Matthew J. Tilley, Michelle Miller & David J. Freedman

ICLR 2023

Biological neural networks can allocate different neurons to different memories, unlike traditional artificial neural networks, which struggle with sequential learning and suffer from catastrophic forgetting. We introduce Learned Context Dependent Gating (LXDG), a method that mimics this biological capability. LXDG dynamically allocates and recalls artificial neuronal ensembles using gates that modulate hidden-layer activities. It employs regularization terms that impose biologically inspired properties: gate sparsity, recall of previous tasks, and orthogonal encoding of new tasks. LXDG alleviates catastrophic forgetting on continual learning benchmarks and outperforms Elastic Weight Consolidation (EWC) alone, notably on permuted MNIST. Additionally, with LXDG, similar tasks recruit similar neurons, as seen on the rotated MNIST benchmark.

-

Divisive Feature Normalization Improves Image Recognition Performance in AlexNet

Michelle Miller, SueYeon Chung, Kenneth D. Miller

ICLR 2022

Local divisive normalization is a phenomenon observed in visual cortical areas of the brain, in which the responses of nearby neurons normalize one another. It involves dividing the response of each neuron by a weighted sum of responses from neighboring neurons, which helps to enhance specific features in visual processing. In this study, divisive normalization was applied between features in AlexNet, a convolutional neural network, to improve its performance on image recognition tasks. This adjustment consistently boosted the network's accuracy, especially when combined with other normalization techniques.
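
A minimal sketch of the operation described above, applied to the feature responses at a single spatial location (in a convolutional network such as AlexNet it would be repeated at every position). It assumes non-negative, post-ReLU responses and the form y_i = x_i / (sigma + sum_j w_ij x_j), with weights that are nonzero only for neighboring features. The constant `sigma`, the neighborhood `radius`, and the uniform `strength` are illustrative assumptions rather than the paper's exact parameterization.

```python
from typing import List, Sequence

def local_weights(n: int, radius: int = 1, strength: float = 0.5) -> List[List[float]]:
    """Normalization weights that pool only over features within `radius` of each other."""
    return [[strength if abs(i - j) <= radius else 0.0 for j in range(n)] for i in range(n)]

def divisive_normalize(responses: Sequence[float],
                       weights: Sequence[Sequence[float]],
                       sigma: float = 1.0) -> List[float]:
    """Divide each response by sigma plus a weighted sum of neighboring responses."""
    n = len(responses)
    if any(len(row) != n for row in weights):
        raise ValueError("weights must be an n x n matrix")
    if any(r < 0 for r in responses):
        raise ValueError("expects non-negative (e.g. post-ReLU) responses")
    out = []
    for i in range(n):
        pool = sum(weights[i][j] * responses[j] for j in range(n))
        out.append(responses[i] / (sigma + pool))
    return out

normalized = divisive_normalize([1.0, 2.0, 3.0], local_weights(3))
print([round(v, 3) for v in normalized])  # [0.4, 0.5, 0.857]
```

The middle feature pools over all three neighbors and is suppressed the most relative to its raw value, which is the competitive effect that lets strongly driven features stand out from their local neighborhood.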